World Conquest

July, 2009

because ... well ... why not ...?

it's a dirty job, but somebody's got to do it.

- Friday, July 31st

- 22:04PM

- Pan-Galactic Pipes:

This being 2009 and all, it's probably a safe bet that you've decided to upgrade your home network to 10GbE (if you haven't already done so). At this stage of the game, that's less of a no-brainer than it was to make the jump to gigabit a few years back, because the variety of viable connectivity options and implementations is broader than it's been any time since back when you were struggling with the relative merits of token ring, 10base2, and Arcnet.

But we'll get to that topic later, probably next month because there isn't a lot of this month left. The less obvious question is the one that would normally come next: now that you've fitted your house with 10-gigabit pipes, how do you fill them?

When you think about it, especially from a historical standpoint, 10 gigabits per second is a lot of data. That's about the theoretical bandwidth of PC133 SDR memory--so up through the Athlon and P4 systems that used 168-pin SDRAM, the CPUs weren't even talking to main memory at that rate. The entire PCI bus could handle only a little more than one gigabit per second from all cards combined.

Obviously things have improved quite a bit in just the last few years. The triple-channel DDR3 memory interface of the current i7 processors can support 20-25 gigabytes per second and a PCIe 2.0 x8 connection can theoretically handle 40 gigabits per second--though in practice the limits are likely to be less. I use PCIe 2.0 x8 as an example because that's what the latest-model top-end RAID and network cards support, though x8 and x4 PCIe 1.1 are also common, with half and a quarter the bandwidth. Still, even x4 PCIe 1.1 is capable of getting you within spitting distance of 10 gigabits per second real-world performance.
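For anyone who wants to check those numbers, here is a rough sketch of the arithmetic. The clock rates, bus widths, and the 8b/10b encoding overhead are standard textbook figures rather than anything measured on the machines here, and the function names are just made up for the illustration.

```python
# Back-of-the-envelope peak bandwidths, in gigabits per second.
# Textbook theoretical figures only; real-world throughput will be lower.

def parallel_bus_gbps(clock_mhz: float, width_bits: int) -> float:
    """Peak rate of a simple parallel bus moving width_bits per clock."""
    return clock_mhz * 1e6 * width_bits / 1e9

def pcie_gbps(lanes: int, gt_per_s: float, encoded: bool = True) -> float:
    """Peak one-direction PCIe rate. PCIe 1.x and 2.0 use 8b/10b line
    coding, so only 8 of every 10 bits on the wire carry data."""
    raw = lanes * gt_per_s
    return raw * 8 / 10 if encoded else raw

pc133 = parallel_bus_gbps(133, 64)                     # PC133 SDRAM, 64-bit
pci = parallel_bus_gbps(33.33, 32)                     # classic 32-bit/33MHz PCI
pcie2_x8_raw = pcie_gbps(8, 5.0, encoded=False)        # the "40 gigabit" figure
pcie2_x8 = pcie_gbps(8, 5.0)                           # after 8b/10b
pcie1_x4 = pcie_gbps(4, 2.5)                           # PCIe 1.1 x4 after 8b/10b

for name, gbps in [("PC133 SDRAM", pc133), ("PCI 32/33", pci),
                   ("PCIe 2.0 x8 raw", pcie2_x8_raw),
                   ("PCIe 2.0 x8 usable", pcie2_x8),
                   ("PCIe 1.1 x4 usable", pcie1_x4)]:
    print(f"{name:20s} {gbps:6.2f} Gbit/s")
```

The gap between the raw and usable PCIe figures is why the practical limits come in under the headline numbers, even before protocol overhead.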

Today's top-end consumer magnetic platter hard drives struggle to pump out a sustained data rate of 100 megabytes per second. With a striped RAID array of ten or more such drives, you could fill a 10GbE link, at least as long as you stick mainly to sustained sequential reads of large files--like video, for example, but unlike databases, which tend to require a lot more random jumping back and forth over the disk surfaces.

So you might snap up, say, 16 1.5TB drives and, if you think losing 20+ terabytes of data would be a bad thing, set aside at least two drives for redundancy as RAID6 or RAID50, and you should be good to go. The best price I've gotten on 1.5TB drives has been $85 a pop, so if you shop around, you could throw that together for $1360 in drives. Add a couple of RAID controllers, a motherboard and CPU, and wrap it all in something like the NORCO RPC-4020 4U server case with 20 hot-swappable SATA/SAS bays, and you could possibly squeak your 20TB NAS in under $2k.

(of course, by next year 20TB NAS units will probably be going for $499 out the door)
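The sizing arithmetic for that 16-drive array can be sketched as below. The drive count, price, and per-drive throughput come from the paragraphs above; the assumption that striped throughput scales linearly with drive count is an idealization, and the variable names are only for illustration.

```python
import math

# Figures from the text; ideal linear scaling across drives is an assumption.
LINK_GBPS = 10          # 10-gigabit Ethernet line rate
DRIVE_MB_PER_S = 100    # sustained sequential rate of one drive
DRIVE_TB = 1.5
DRIVE_PRICE_USD = 85
N_DRIVES = 16
PARITY_DRIVES = 2       # RAID 6 gives up two drives' worth of capacity

link_mb_per_s = LINK_GBPS * 1000 / 8                       # bits -> bytes
drives_to_fill_link = math.ceil(link_mb_per_s / DRIVE_MB_PER_S)
usable_tb = (N_DRIVES - PARITY_DRIVES) * DRIVE_TB
drive_cost_usd = N_DRIVES * DRIVE_PRICE_USD

print(f"link rate:            {link_mb_per_s:.0f} MB/s")
print(f"drives to fill link:  {drives_to_fill_link}")
print(f"usable capacity:      {usable_tb:.1f} TB")
print(f"cost of drives:       ${drive_cost_usd}")
```

At exactly 100 MB/s per drive it takes about thirteen drives to reach raw line rate, so a 16-drive array leaves a little headroom for parity and overhead.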

But $2k is still a respectable pile of change, and not every application needs 20TB+ of storage or is best served by that type of array. Nyx has a heterogeneous assortment of login machines, email servers, web servers, etc., that pull the user files and settings off a dedicated NFS server. Currently, each system has its OS and system settings on its own local drive, but when a user logs in or accesses one of those machines, the user data is read off the NFS server, so it's the same regardless of which machine a user is on.

But it's not a huge amount of data, especially by today's standards: less than 100GB for 3000 users. So there are some other storage options that could potentially take a good-sized bite out of a 10Gb/s pie.

Storage test system: ASUS P5WDG2-WS Pro / Intel Q9300

Intel X25-E Extreme 64GB (slc) / 4 x Samsung MCBQE64GPMPP-MVA01 64GB (slc) / Intel X25-M 160GB (mlc)

I decided to use the ASUS P5WDG2-WS Pro because it had two PCIe x16 and two PCI-X 64-bit slots, giving me the most flexibility with the RAID and network controllers I have around. I wanted to toss in a quad-core chip so I'd at least have the option of comparing fileserving performance with two versus four cores. The Q9300 isn't on ASUS' supported CPU list right now, but it was for a while, and with BIOS 905 the board recognizes the chip and has had no problems so far. ASUS and Intel keep doing that--they'll list CPUs on their motherboards' supported lists for a while and then quietly take them off without comment. Drives people crazy, especially back when ASUS was shipping the boards with labels that loudly proclaimed their 45nm quad-core support, even though at the time the BIOS they had available wouldn't recognize any 45nm CPUs, dual or quad-core. But now that the boards and BIOS finally do support the 45nm quad-core CPUs, they've disappeared from the list.

Like any other computer or IT product, the documentation is always incomplete and often out-and-out wrong, and the only way to determine what actually does work is to get a pile of components and start experimenting.

So for the potential replacement for Nyx's current fileserver, I thought I'd see what I could do with solid-state drives. SSDs have low power consumption and very low random access times which make them well-suited for many types of usage patterns. But they are a very different kind of animal from rotating platter drives and while it's conceptually fairly easy to address their shortcomings (poor performance with small random writes), the implementation of those techniques is more difficult or impossible because filesystems and controllers were simply not designed with solid state drives in mind.

But that's changing--with the 2.6.30 Linux kernel, there are more filesystems to choose from than there are varieties of salad dressing in the grocery, including some like NILFS2 that were designed with SSDs in mind and others like BTRFS that have an "SSD Mode" that in the benchmarks I've seen usually performs more poorly on SSDs than its non-SSD mode. 3ware/AMCC/LSI made a big announcement recently about releasing new SSD-optimized firmware for their 9650SE RAID controllers, so there's one of those in the picture above, waiting to be tried out. In case that model proves unsatisfactory, I have a few other RAID controllers from LSI, LSI, and...um...LSI. (It's not my fault; LSI just keeps buying up all the smaller manufacturers of RAID controllers.)

- Sunday, July 26th

- 14:41PM

- Fields of the Nehalem:

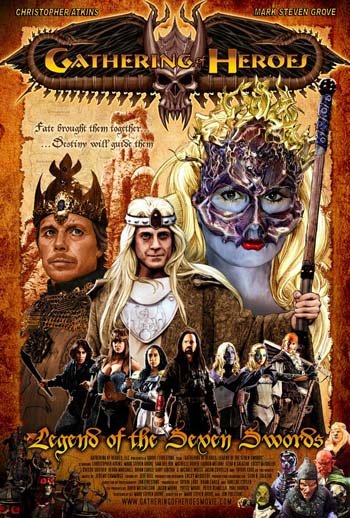

We're getting into the thick of CGI, compositing, and rendering on Gathering of Heroes. There's not only a whole lot of CGI effects, greenscreened performers, and virtual locations involved, but the source material was all shot in 4K resolution on the RED One camera, so there's a lot of data going in and out.

I've gotten in one seat of Maya Unlimited, which together with Zbrush, Poser Pro, and five copies of Adobe's CS4 Production Studio Premium, is giving the assortment of editing stations and artists down at the studio a pretty good workout.

Even though both Maya Unlimited and Adobe After Effects Pro allow you to use the other machines you have around as distributed rendering nodes, the more sheer CPU power you have at your fingertips, the more manageable it is to be moving around in a 4K virtual world. Some of the initial scene tests were chewing through upwards of 15 hours of render time per second of completed footage on a 2.66GHz 4-core i7 920 system. Jon Firestone has made a lot of progress at streamlining the rendering process without any visible loss in final quality or realism since then, but we're still talking about some serious computing demands no matter how much pre-computational optimization you do.

To bump up the bar another half-notch, I thought I'd build the next Maya workstation around the surprisingly small (and almost as surprisingly frill-free) dual-1366 "Nehalem" Z8NA-D6C motherboard from ASUS and a pair of 2.93GHz X5570 Xeons, the fastest currently available without jumping up to the 130 watt thermal envelope W5580 model (which isn't currently on the supported list for the Z8NA series).

ASUS Z8NA-D6C

dual-socket LGA1366 "Nehalem" ATX motherboard

After the last several years of 5xxx Xeons requiring the use of power-hungry (and more than a bit cash-hungry) FB-DIMMs, the new crop of 55xx Xeons will work with the same DDR3 memory that the single-socket i7s use. They'll support ECC and ECC Registered DDR3 too, but the fact that they'll work happily with the cheap stuff is a boon for workstation use. (I'd be more inclined to shell out the extra bucks for ECC/Registered or at least ECC for servers, but even then at least it's just registered memory, not the fully-buffered flavor).

The Nehalem boards are cheap, too--at least the lower-end ones. ASUS' Z8NA-D6C will set you back about as much as their basic P6T single-LGA1366 X58 board; you can even get the next model up, the Z8NA-D6 (which adds another 8 SAS ports and the ability to add some additional optional components...at additional optional prices) for less than any L337 Gamer X58 single-socket i7 board runs.

The minimalistic price does get you a pretty minimalistic board: two LGA1366 sockets, six DDR3 slots, one x16 and two x8 PCIe, and a single PCI slot round out the board. You get two RJ-45 network ports, PS/2 keyboard and mouse, and only two USB ports on the back--no firewire, no integrated sound at all, and that's it. But for my purposes, that's sufficient--and for that price, I can add my own firewire and audio cards.

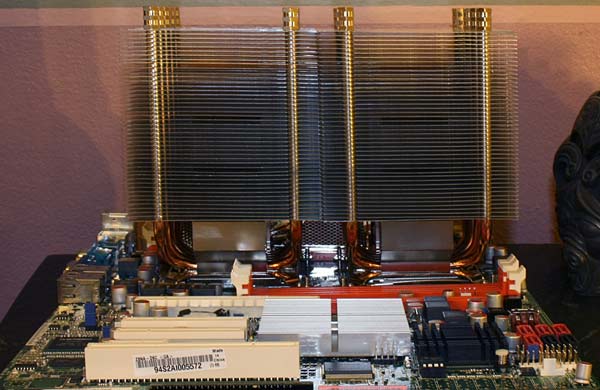

ASUS Z8NA-D6C with dual Intel X5570 Xeons

(and a pair of Scythe Mugen 2 CPU coolers)

So most of the board's real estate is taken up by memory slots and CPU sockets. That has the potential to make things a fairly cramped fit, which is probably why ASUS states in the Z8NA series manuals that you should only use a "qualified" heatsink when installing a CPU. Now, a lot of computer techs would probably have an easier time with this step of the process if ASUS made a list of qualified heatsinks and CPU coolers available, but they don't. I've found absolutely no hints or suggestions for what CPU coolers will and won't fit on ASUS's product pages, support pages, FAQs, or forums.

So, naturally, I figured I'd give the biggest and best CPU coolers I had on hand a try--a pair of Scythe Mugen 2 SCMG-2000. As you can see above, it's a perfect fit.

Mounting hardware for the Scythe Mugen 2 CPU coolers

(note the snippage done to the top and bottom left "fingers" of the right mounting plate)

...almost perfect, anyway. Regardless of what Yahoo Internet Life claimed in their bio piece on me some years back, I don't keep having to take a hacksaw to the servers out here because I *want* to, I do it because I *have* to. In this case I only had to trim back a couple of attachment points that would only have been used on a different kind of motherboard anyway. Doesn't affect the mounting strength or straightness on an LGA1366 board, whether single or dual.

The fins of the two Scythe Mugen coolers do overlap by about 3mm. Apart from the more intimate arrangement than is usual for CPU coolers, this didn't make the mounting procedure more difficult and it shouldn't significantly impede airflow. The resulting "Mugemini" arrangement lends itself perfectly to a front-to-back push-pull arrangement using the standard included 120mm fans and mounting clips, but my current inclination is to assemble a frame and a bit of ductwork to direct the airflow vertically upwards through the fins. The eventual airflow implementation will depend on which case I decide to put the board in, so stay tuned.

Anyway, back to testing and configuration. As you can probably tell, I still need to fill out the rest of the memory, add cards, cables, drives, and even an operating system, but especially when I'm putting together a new type of build, I tend to keep adding one part at a time and make sure it all still works before moving on to the next. This'll turn into a complete computer before you know it. Just you watch!

- Monday, July 20th

- 21:02PM

- Infrastructure IV: Short, Cute, and Absurdly Noisy:

Yeah, I'm sure you know a few like that, too.

A lot gets said these days about making energy-efficient, quiet computers, and by and large, a great deal of progress has been made in that regard, especially since the end of the NetBurst era. The last two incarnations of Kanga run quietly thanks to Antec P180 sound-deadening cases, a Thermalright HR-01 semi-passive heatsink (connected to one of the 120mm exhaust fans of the P180 with a flexible duct) in the case of Kanga Mark IV, a Scythe Mugen 2 in the case of Kanga Mark V, and Antec Phantom passively-cooled power supplies (which really are perfectly designed for the airflow pattern of the P180, though the 12v cable is just short enough that you pretty much have to connect it to the motherboard first and only then wrestle the power supply into the case).

My hope had been to take advantage of some of this cooler, quieter technological innovation down in the servers' quarters, too. To some extent, I already have on my own, replacing several older machines with quietly-cooled Core 2 based systems and a few mobile-on-desktop motherboards, which will use regular desktop (or server) add-in cards, but notebook CPUs (which require considerably less power and cooling than their desktop counterparts, generally at the expense of reduced clock rates).

But the server area is certainly not quiet, and not only is quiet and low-power a good thing on general principles, noisy servers have the disadvantage over other machines that you can't just turn them off when the sound gets in the way. When we're shooting movies in other parts of the house, we often shut off the refrigerators (and, ideally, remember to turn them on again when we're done), but doing the same with the servers causes problems much more severe than mooshy ice cream.

You might think that, at least, the servers are off at one end of the basement and, thus, not often a problem for a movie's location sound, but you'd be surprised how often people want to shoot movies down there--both for the serverific ambiance of the floor-to-ceiling technocobble and for the adjoining weight room.

Besides, you wouldn't want the servers to be *off* because the flickering glow of all those flashing lights is an essential part of the charm...you'd just prefer them to do their flashing more quietly and any noises that you later on decide that you *do* want, you can add in post.

The current farm of servers down there is a more-or-less random collection of rackmount servers, converted workstations, and unclassifiable products of ten+ years of building, upgrading, and repairing what was on hand. There's a SparcServer 20, a Pentium-mmx system or two (and, I think, one pre-mmx Pentium 90), a couple of dual PII or PIII Xeon servers, and a few older drive arrays stocked with half-height 3-1/2" 10K drives. All stuff that long predates the current universal availability of components designed to be efficient and quiet-running.

So, there's certainly ample room for improvement, noise-wise. According to my handy Radio Shack sound level meter, the current sound level was 54dB, measured half a meter in front of the racks, pretty much regardless of where you go. Since there are currently entire walls of computers and networking equipment, the sound level doesn't change much as you walk around or step back from the racks, at least until you get ten feet or more away.

You've already heard my surprise that the Cisco 3745 router was so much louder than the 3620 models I'm currently using, despite the fact that the 3620 is a 1u design, versus the 3745's 3u. I guess you can chalk that up to a hefty dose of design negligence, but with luck that's the exception....

...so I fired up a few of the more recent model servers I'm testing, plugged into a Kill-A-Watt power meter and set up the aforementioned Radio Shack sound level meter on a tripod, half a meter in front of each machine in turn:

| model | configuration | cores / threads / GHz | power consumption | noise level |
|---|---|---|---|---|
| SunFire v440 | CPU: UltraSparc IIIi 1.28GHz x 4; RAM: 16GB (1G x 16); HDD: 73GB 10K u320 x 4; Video: XVR-500 | 4 / 4 / 1.28 | 522w | 65.5dB |
| SunFire v240 | CPU: UltraSparc IIIi 1.28GHz x 2; RAM: 8GB (1G x 8); HDD: 146GB 15K u320 x 1; Video: XVR-100 | 2 / 2 / 1.28 | 294w | 64dB |
| SunBlade 2500 silver | CPU: UltraSparc IIIi 1.6GHz x 2; RAM: 8GB (1G x 8); HDD: 64GB Samsung SLC SSD (MCCOE64G8MPP); Video: XVR-1200 | 2 / 2 / 1.6 | 285w | < 54dB |
| Sun Ultra2 | CPU: UltraSparc II 296MHz x 2; RAM: 2GB (128M x 16); HDD: 9GB 7.2K u3 x 2; Video: Creator3d series 2 | 2 / 2 / 0.296 | 151w | < 54dB |
| SunFire T1000 | CPU: T1 "Niagara" 1GHz / 6 core; RAM: 32GB (4G x 8); HDD: 64GB Samsung SLC SSD (MCCOE64G5MPP) x 2 / RAID 0; Video: none | 6 / 24 / 1.0 | 150w | 75dB |
| Cisco 3745 | CPU: R7000 CPU at 350MHz; RAM: 512MB (256M x 2); HDD: 4GB Sandisk Extreme III CF x 2; Video: none | 1 / 1 / 0.350 | 58w | 60dB |

So much for quiet computing. I'd thought it was bad enough that one late-model Cisco 3745 was 6dB louder than an entire room full of 30-odd ten-year-old computers, but apparently the designers down at Sun Microsystems have taken it upon themselves as a personal challenge to make any new model louder than the previous ones. I can only imagine it's the same motivation that makes that annoying neighbor kid sit in his beat-up but usually functional muscle car and rev the engine for two hours straight...except that in the case of the computer engineers, there's far less hope that they will eventually grow out of it or, failing that, head off to college.

The late-90's vintage Ultra 2 isn't the quietest computer around, but it's quiet enough to be unnoticeable in the general whir of the server room fans. The more modern SunBlade 2500 Silver isn't loud enough to make much difference on the sound meter, but it is louder--loud enough that it does stand out from the crowd if you're nearby.

But the rest of the Sun machines leave every server I've ever had running down there--even the dual Athlon MP machine with its aptly-named pair of "volcano" cooling fans--completely in the dust when it comes to sheer sound power. The v240 and v440 each cranked out upwards of ten dB more than the whole server room. The T1000, adorably cute as it is to look at, puts out 21dB more. That represents more than 100 times as much sound power as the rest of the servers in the room put together.
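Decibels are logarithmic, so the conversion from a level difference to a power ratio is worth spelling out. This is a minimal sketch using the standard 10 dB per factor of ten relationship and the meter readings from the table. It assumes the readings are comparable (same meter, same half-meter distance), which is how they were taken.

```python
ROOM_DB = 54.0  # ambient level of the existing server room, from the text

def power_ratio(delta_db: float) -> float:
    """Sound power ratio corresponding to a level difference in dB."""
    return 10 ** (delta_db / 10)

# Meter readings from the measurements above
readings = {
    "Cisco 3745": 60.0,
    "SunFire v240": 64.0,
    "SunFire v440": 65.5,
    "SunFire T1000": 75.0,
}

ratios = {name: power_ratio(db - ROOM_DB) for name, db in readings.items()}

for name, ratio in ratios.items():
    print(f"{name:14s} +{readings[name] - ROOM_DB:4.1f} dB  ~{ratio:6.1f}x the room")
```

A 20 dB difference is exactly a factor of 100, so the T1000's 21 dB works out to roughly 126 times the sound power of the whole room.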

With the T1000, the designers obviously felt that "incessantly revving muscle car" or "shop vac" wasn't a difficult enough goal and instead were striving for something between "air raid siren" and "Who Concert."

But one thing I have to admit is that it pumps an absolutely insane amount of air for a short-length 1u box. When the Nyxinator powers up, loose papers eight feet behind the back of the machine will start flying around--but since the entire system only burns 150 watts, it's still just cold air coming out the back. If the fan belt on your old Chevy ever snaps, strapping a SunFire T1000 onto the grille would probably be more than sufficient to keep the engine cool, and it really would have the highest sustained network transaction rate of any commonly available hood ornament.

Don't get me wrong; I'm having all sorts of fun testing and playing with possible upgrades--I just didn't expect that the most difficult part of the process would be figuring out how to make the new machines quiet enough to cohabit with. I'd actually been expecting that getting the bugs and incompatibilities worked out between a roomful of disparate hardware, software, and operating systems would be the more challenging and time-consuming part. Shows you what happens when I don't keep up with the state-of-the-art for a while.

- Friday, July 10th

- 21:13PM

- Infrastructure III, The Nyxinator:

The biggest portion of my current infrastructure experimentation is directed towards possible upgrade ideas for Nyx.Net (the oldest operating public-access ISP, which happens to live in the basement). I've pretty much rebuilt Nyx entirely top-to-bottom about three times over the years, and individual machines have been replaced, repaired, or upgraded more frequently--for quite a while, it was necessary to upgrade Nyx's mailserver every four to six months just to keep up with the rising spam levels and the necessary computational power to deal with it.

Sun Ultra2 Workstations

(Nyx's current main login machines)

Nyx's current main login machine is a dual 296MHz UltraSparc2 machine, which is a fair bit removed from the state-of-the-art these days. It's still running strong, but it's not getting any younger (not that any of us are), and I only have, um, fifteen spares in case it explodes.

...yeah, that's not really an "only." Actually, it's one of those stacks of equipment that I look at and think that I really didn't need to get *that* many systems. Unfortunately, they're not even all that decorative. Nobody is going to look at a six-foot-something stack of Sun Ultra 2's and think, "wow! that's a high-tech looking pile of hardware!"

So I'm toying with various ideas for revamping Nyx's structure and components, and certainly one of the coolest candidate machines is Sun's SunFire T1000 server:

SunFire T1000

"The Nyxinator"

Which, as I'm sure you've noticed, is tiny. Sun's version of the T1000 even makes Robert Patrick look big by comparison. (I know I should have included him in this shot just for scale, but he was on another project this evening.)

SunFire T1000 "Coolthreads" topless

The T1000 is the junior member of what Sun refers to as their "Coolthreads" server line. Not only is the case tiny--just over half as long back-to-front as Sun's other 1U servers--but when you lift off the cover, you can see that the active guts *are* larger than an average sandwich, but not by much, and that's only when measuring side-to-side. Vertically, the whole business is not much thicker than a slice of Wonder Bread.

You'll also notice that it's lacking a few things you might be used to: there's no video port, no keyboard or mouse connection, no optical drive for installing software or anything else, no USB ports that you could use to connect a keyboard, mouse, optical drive, or thumbdrive (in case you were imagining you could install software that way). There aren't even any available SCSI, SATA, IDE, or floppy drive ports on the motherboard that you could sneak a cable to from outside. The T1000 comes with a serial console port and a bunch of network ports. All the initial setup, booting, loading of software or files, etc., is done over the network. Apart from that, you have only two other controls on the box: a small pushbutton that turns on a flashing LED on the front and back of the machine--in case you have trouble finding it in the dark--and a power cord that can be yanked out to initiate emergency shutdown procedures.

"The Nyxinator"

(naked and ready for action!)

This particular model has just one CoolThreads "Niagara" T1 6-core cpu, but each physical core handles four threads simultaneously, so from the standpoint of a user or the OS, it looks like 24 CPUs. Those remarkably short memory DIMMs (you can see they don't even come up to the height of the clips on the DIMM sockets) pack 4GB each, so there's 32GB of ECC Chipkill memory in that slice of Wonder Bread.

On the upper-left is a pair of 64GB Samsung MCCOE64G5MPP SLC solid-state SATA drives running in RAID 0 using the onboard SAS RAID controller, and on the lower right is Sun's own X1027A-Z (often known as fru# 501-7283 to its friends) dual-port 10GbE network adapter. Despite having two XFP ports, Sun cautions that while each port individually will fully support 10 gigabit data rates, if you try to saturate them both simultaneously, the card can only handle 16 gigabits in each direction. I guess if you were really determined, you could team it with the four one-gigabit ports and squeeze 20 gigabits per second in and out that way.

It'll have to do. Getting by with what I can scrounge up on the surplus market, sometimes I have to make compromises.

...But then I have to step back for a moment and ask myself, "does a little home network really *need* 10GbE?"

Well...yeah, of course it does. What was I thinking?

- Wednesday, July 8th

- 18:28PM

- Infrastructure, Part II:

Moving to more modern desktop computers with 12 Gigabytes of memory apiece entailed switching to a newer operating system and, yes, I'm still using Windows-based machines on my desk. On the whole, I'm more pleased with Vista 64 at its current level of maturity than I expected to be--besides being able to deal with more than 4GB of memory and 8 or more virtual cores, it does have the highly desirable distinction of being (in my experience, anyway) the most stable Microsoft operating system since NT 4.0. That's a very pleasant change after the much more limited running time between crashes that plagued Windows 2000 and XP.

I do think Microsoft's operating systems age backwards, though. NT 4.0 seemed a mature, stable, established sort of OS. Not very flexible or willing to accept new devices or any other kind of change, but it was dependable and responsible and did as it was told. Windows 2000 was more like a fresh, new MBA graduate--not quite so steady or stable, but it was better at coping with new hardware and drivers. By Windows XP, Microsoft was just hitting its upper teens, and now with Vista, Microsoft has reverted all the way back to the toddler stage--always wanting attention and really, really enthusiastic about having just discovered the word, "no."

I am constantly grateful that the legal consequences of beating Vista with a stick to get it to behave are so much milder than what generally ensues from treating a human toddler the way Vista so richly deserves.

I am getting perilously close to just giving up on Firefox after having previously converted over to it on pretty much all the machines around here. On my desktop machines, I'd stuck with Firefox 1.5 all this time despite its constant pleading for me to upgrade, but as long as I was doing a fresh install of a new OS on Kanga and Roo, I decided to "upgrade" to Firefox 3.0.

While Firefox 1.5 tended to go about two weeks between crashes, Firefox 3.0 struggled to make it through two hours, even when running in "safe mode" and/or with all the plugins and add-ons disabled. One or two visits to any pages on Sun.com are pretty much guaranteed to make it lock up or crash immediately. I switched to 3.5 beta 4 and that was certainly better--it could usually make it through 6-10 hours between crashes. Then, since I'd neglected to disable the automatic updates, it "upgraded" itself to RC1 one morning, and RC1 usually can't even finish loading before it crashes. Being perverse, I tested it a few times--mysteriously, most of the time, when trying to start 3.5 RC1, it would crash 7 times and then start successfully on the 8th try. Over and over again. Why 7? Why not 6? Or 12? And why did I actually sit there and keep testing this weird quirk?

I went back to beta 4 and got by with only a few crashes per day until the actual 3.5 release version came out. The non-beta version 3.5 also crashes at least a few times a day, but they are official, non-beta crashes now.

That's on two i7 machines with fresh installs of Vista 64. On a 1.1GHz PIII laptop running XP, the 3.5 release pegs the CPU at 100% just to display a blank page and takes anywhere from five seconds to more than a minute to respond to an individual keystroke or mouse click.

I haven't entirely kicked out Firefox yet, but I have had to get into the habit of keeping an Explorer window open for anything on Sun.com or where I'd rather not have the browser crash in the middle of whatever I'm doing.

Cisco 3620 Routers

(the current setup)

Doing much typing and OS-beating is a bit slower with my right arm out of commission, but at least it's a task a lot better suited for recuperation than building the new editing systems would have been. But, since I've never been one for moderation, the next part of the treehouse infrastructure I thought I'd look into is the router.

Currently, the connection between the treehouse external network (the one with all the publicly accessible machines) and the rest of the world goes through a Cisco 3620 and into a T1. Believe it or not, there was a time when that seemed pretty good--and back when they were new, it certainly seemed like a pretty big step up from the 2500 series routers I'd had before. (Anybody want some of those? I still have a stack of 2500 series routers piled up somewhere.)

At least for me, this kind of thing involves a lot of research, testing, and puttering around with configuration options--again, something I'm reasonably able to do with one hand. These days, it's fairly unlikely that I'm going to have to break out the soldering iron again to upgrade the internet connection.

(As an aside, the Cisco 3620 above does have the distinction of being the last piece of network hardware that I actually did any component-level diagnosis and repair on--but that was back when a model 3620 cost as much as a modestly-priced used car rather than a modestly-priced used Matchbox car, something it reached price-parity with on Ebay back around October of 2007.)

For a variety of reasons, security issues certainly among them, I wanted to update the router's IOS version and, unfortunately, all of the Cisco routers that had the features I wanted and were supported under the 12.4 IOS releases were models that were going for more on Ebay than a limited edition McDonald's Happy Meal toy.

Okay...*most* limited edition Happy Meal toys. I didn't mean one of the mint-condition, still-in-the-plastic-baggie limited edition McDonald's Happy Meal Toys...I meant one that's obviously already been played with a bit and maybe has a few bite marks, but the head (at least I think that was the head) is still pretty much attached or at least included.

The first model I started playing with was the Cisco 3745. You can see here that I tossed in an NMD-36-ESW-2GIG 36-port 10/100 plus 2-port gigabit module, mainly because the 3745's built-in ports only support 10/100 and I wanted to have at least *one* gigabit port on there, just as a matter of principle.

Cisco claims that the 3725 and 3745 routers only support internal and external compactflash cards up to 128MB, but at least with IOS version 12.4(15)T8, they'll very happily handle anything I can stuff into their slots. When they format a card above 2GB, they automatically switch to FAT32 and have no problem reading or writing that format. Even if you don't need the extra space, swapping out one of the tiny Cisco-approved flash cards with a more modern higher-capacity model results in the router loading the software image in half the time or less, because the real Cisco-labeled cards are some of the slowest around.

Cisco 3745 Router

(candidate #1)

Unfortunately, the specified memory limits of 256MB and 512MB for the 3725 and 3745 routers aren't so easily broken. If you scare up the right kind of module (low-density, 16x8 chips), they actually do recognize memory above that amount, but I couldn't get either of them to boot consistently with more than their stated max memory installed, so I imagine there's an addressing issue with other components living in the memory space above 256/512MB. If you've seen the bootup screen roll past as many times as I have, telling you how much memory it detected and that ECC is disabled, you've probably wondered what would happen if you swapped out the Cisco standard unbuffered, non-ECC memory with a stick or two that was brimming with ECC goodness. Well, what happens is that the router just claims it's bad or unsupported memory and won't boot (unbuffered or, for that matter, buffered).

So you're just stuck with watching it tell you "ha-ha! You don't get ECC with this model!" every time it boots.

Cisco 2851 Router

(candidate #2)

Another candidate I'm considering is the Cisco 2851 router, which is the top of the 2800-series line. That gives you two gigabit ports built in and up to 1GB of RAM. It doesn't have as much space for add-in components as the 3745, but it has 3 PVDM slots built into the mainboard, whereas you'd have to buy an add-in module if you wanted to have those or the gigabit ports in a 3700-series box.

However, at least while running IOS 12.4(23), the 2851 would *not* read anything formatted FAT32 (whether it was formatted on a PC or on one of the 3700-series routers). If you put in a memory card bigger than 2GB, you can get it to format it and it will read and write to it...but it reports the available space as negative. Depending on how the rest of the library is implemented, it's pretty likely that once you try to write beyond the 2GB boundary of the drive (which may not require that 2GB actually be in use, if you've deleted or rewritten some of the files on the device since you formatted it), it'll "wrap" and overwrite some of your existing files with data from other files, leaving you with corrupt software images on your flash drive.

It's all the more odd that the 2851 would have a 2GB limit when the 3700-series routers don't: not only is the 2851 a current model, it also has a pair of front-panel USB ports which suffer from exactly the same limitations...and the great majority of USB thumbdrives that you can buy these days or that people are using are larger than 2GB. (But stay tuned; there's no reason that this limitation would be imposed by the hardware; it's almost certainly a software issue and may not be a problem with other IOS versions.)
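A negative "available space" figure is what you'd expect to see if a byte count gets stuffed into a signed 32-bit integer somewhere along the way. That's only my guess at the cause, not anything taken from IOS itself, and the helper name `as_int32` below is made up for the illustration. Here's a minimal sketch of the arithmetic:

```python
# Hypothetical illustration only: nothing here comes from IOS.
# It shows what a byte count looks like once it is squeezed into
# a signed 32-bit integer, which tops out just short of 2GB.

GB = 1024 ** 3

def as_int32(value):
    """Reinterpret the low 32 bits of value as a signed 32-bit integer."""
    value &= 0xFFFFFFFF              # keep only the low 32 bits
    if value >= 0x80000000:          # top bit set means negative
        return value - 0x100000000
    return value

for free_gb in (1, 2, 3):
    free_bytes = free_gb * GB
    print(f"{free_gb}GB free is reported as {as_int32(free_bytes)} bytes")
```

Anything up to one byte short of 2GB comes through intact; at exactly 2GB the value flips negative, and 3GB of free space shows up as minus 1GB. A file offset handled the same way would go wrong at the same boundary.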

Cisco rates the 2851 at 220Kpps / 112.64Mbps. That's awfully close to the 225-250Kpps / 115.2-128Mbps rating of the 3745 and easily beats the 3725's 100-120Kpps / 51.2-61.4Mbps. Any of these completely blow away the paltry 20-40Kpps / 10-20Mbps rating of the 3620 (or the 4.4Kpps / 2.25Mbps for the 2500 series routers I used before that).
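The paired Kpps and Mbps figures line up if you assume every packet is 64 bytes, which is the smallest Ethernet frame. The 64-byte size is my inference from the arithmetic rather than something stated with the ratings, and the function name is just for this sketch:

```python
# Convert a packets-per-second rating into megabits per second.
# Assumption: 64-byte packets, inferred because it reproduces the
# quoted Mbps figures; real traffic with bigger packets moves more bits.

PACKET_BYTES = 64

def kpps_to_mbps(kpps, packet_bytes=PACKET_BYTES):
    """Return throughput in Mbps (millions of bits/s) for a Kpps rating."""
    bits_per_second = kpps * 1000 * packet_bytes * 8
    return bits_per_second / 1_000_000

# (low, high) Kpps ratings as quoted above
ratings_kpps = {
    "2500": (4.4, 4.4),
    "3620": (20, 40),
    "3725": (100, 120),
    "2851": (220, 220),
    "3745": (225, 250),
}

for model, (low, high) in ratings_kpps.items():
    print(f"{model}: {kpps_to_mbps(low):.2f} to {kpps_to_mbps(high):.2f} Mbps")
```

At 64 bytes per packet, 220Kpps works out to exactly 112.64Mbps, which is why the Mbps numbers look so modest next to a gigabit port: they describe the worst case of nothing but tiny packets.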

Between the 3745 and 2851 models, the 2851 is probably the more practical choice: longer support lifespan (probably), more memory, and broader support for newer add-in modules and, presumably, modules-to-come. (The 2801, 2811, and 2821 models are more limited in this respect, but since the 2851 is the top of the current 2800 line, Cisco grudgingly allows more of its modules to work, even if you didn't go and buy a 3800-series router like they really wanted you to.)

...and there's that noise issue again. The front panel of the 3745 consists entirely of a decorative grille covering a perforated metal assembly of four large cooling fans...all of which are set to run continuously at "shop vac" levels. I have more than thirty machines running 24/7 down in the servers' quarters, but when I would power up the 3745 for testing, you could hear that one little machine two flights of stairs up.

Measured at the outlet, a fully-functional 3745 chugs away at only 58 watts when running--so why design it to double as a wind tunnel? I don't think I've seen a quad-Xeon server that gets this much ventilation. The 3620 is a 1U design and it certainly doesn't make anything remotely close to the racket of the 3745, despite the handicap of its small form-factor. I've been unable to find any sign that the fan speed or noise level can be brought down to more reasonable levels, short of breaking out that soldering iron I told you I wasn't going to use and adding a speed controller or replacing the fans with low-airflow models.

The 2851, on the other hand, has automatic fan speed control that kicks in as soon as the unit boots up and keeps the fans running at a level that's practically whisper-quiet compared to the 3745. It may not reach the levels of silence that my upstairs computers do these days, but the little bit of noise that it does make is quite lost amidst the other servers down on that level.

The only real drawback is having to read the occasional bit of Cisco's documentation where they say, "this module/function/option is not supported on the 2800 series because if you wanted that kind of service, you should have ponied up the extra bucks for a 3800 series box, you cheapskate, you."

Okay. Okay. I'll go check under the couch cushions again. Maybe I missed some loose change the last time.

- Saturday, July 4th

- 15:53PM

- Infrastructure, Part I:

In the brief window between finishing up taxes and accounting and going in to have my arm re-attached, I figured I'd get in a few systems upgrades out here at the treehouse. The two main computers I have at my desk are Kanga and Roo, and I'd already replaced Roo (mark IV) with a new Roo (mark V). I'd originally been thinking of building the new version of Kanga around a dual-core Intel e8400 CPU, which combines a reasonable degree of speediness and low-power-use-ness. Roo is generally dedicated to video rendering and Kanga handles everything else--graphic design, web browsing, typing and editing--so Kanga's workload really doesn't benefit very much from more than two CPU cores, at least with current software.

However, I was feeling rather frustrated by the incredible slowness of editing large graphic files for magazine ad layouts, poster artwork, and other large-format ads, and it looked at the time that the cheapest way to get a little more memory was to go with another i7-based system, which easily accommodates 12GB instead of 8GB with 2GB DIMMs (4GB DIMMs being a little more pricey).

I don't play games, but I did want a video card with CUDA support, so I initially put in an ASUS "Dark Knight" 1GB 250GTS (yes, I know, it's essentially a reloaded 9800GTX) because of the manufacturer's claimed low power consumption and low noise levels. ASUS may not have been lying about the power consumption, but the card wasn't "quiet" in the sense of something you'd want to be in the same room with; it was "quiet" in the "as compared to commercial jet aircraft built between 1972 and 1974" sense.

(Initially, I thought that it might be that I needed to adjust the fan speed to something more reasonable than "L33T Gamer Overclock full-on blower" mode, but when I installed the fan control software, it turned out that the "too loud to share a room with" level *was* its quietest mode. At L33T Gamer settings, this particular card no longer had the distinction of being quieter than most commercial jet aircraft built between 1972 and 1974.)

So I replaced it with an MSI 260GTX. Even though it's the noisiest part of the system, that one really is a pretty quiet card.

Kanga (Mark V)

For the motherboard I used an ASUS P6T non-deluxe version: personally, I thought the layout of the "deluxe" version was less useful than the non-deluxe. As best I could tell, other than the higher price tag, all you got for buying the deluxe version was having the PCIe slots next to each other instead of spaced out (which is a problem for a lot of video cards and RAID controllers).

You can see I used another Antec Phantom silent power supply and Dell Perc5i (basically an LSI 8408e with the connectors on the inside) just like I did in Kanga (mark IV). Kanga's drive needs are a lot more modest than Roo's, so I set up four 1TB drives in RAID5 on the Perc for data and added a single 160GB Intel x25-M for a boot/system drive. It works.

Roo (mark V) is actually almost the same, just with a little more ooomph added here and there: a Gigabyte GA-EX58-UD5 motherboard because I just had a piece of video take 52 hours to render, so *maybe* I do want to start doing some overclocking after all. Eight 1.5TB drives in RAID 5 for the data array because all this 4K video adds up real fast; a 300GB Velociraptor drive for boot/system; a BFG 285GTX video card in hopes that CUDA will actually amount to something with heavy-duty video work; and a PC Power and Cooling 750 Watt power supply. So Roo is not nearly as quiet as Kanga, but it's not bad at all. All together, certainly less than 10% of the sound output of the ASUS "Dark Knight" video card.

With 15 terabytes on my desk, I should *not* have it halfway filled up by now. When did a terabyte turn into something tiny?
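For anyone keeping score, here's a rough tally of the drives listed above. It assumes the usual RAID 5 rule that one drive's worth of space goes to parity and uses the drive makers' decimal terabytes; the function name is just for this sketch, and formatted capacity will come out a bit lower.

```python
# Rough capacity tally for Kanga and Roo, in decimal terabytes.
# Assumption: standard RAID 5, where one drive's worth of space
# holds parity, so usable space is (drive_count - 1) * drive size.

def raid5_usable_tb(drive_count, drive_tb):
    """Usable capacity of a RAID 5 array built from identical drives."""
    if drive_count < 3:
        raise ValueError("RAID 5 needs at least three drives")
    return (drive_count - 1) * drive_tb

# Kanga: four 1TB drives in RAID 5 plus a 160GB boot drive
kanga_usable = raid5_usable_tb(4, 1.0) + 0.16
# Roo: eight 1.5TB drives in RAID 5 plus a 300GB boot drive
roo_usable = raid5_usable_tb(8, 1.5) + 0.30

raw_total = 4 * 1.0 + 0.16 + 8 * 1.5 + 0.30

print(f"Kanga usable: {kanga_usable:.2f} TB")
print(f"Roo usable:   {roo_usable:.2f} TB")
print(f"Both usable:  {kanga_usable + roo_usable:.2f} TB")
print(f"Raw total:    {raw_total:.2f} TB")
```

The raw and usable totals land on either side of the 15 terabytes mentioned above, so that number works as a round figure.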

- Sunday, July 26th
